stevevw

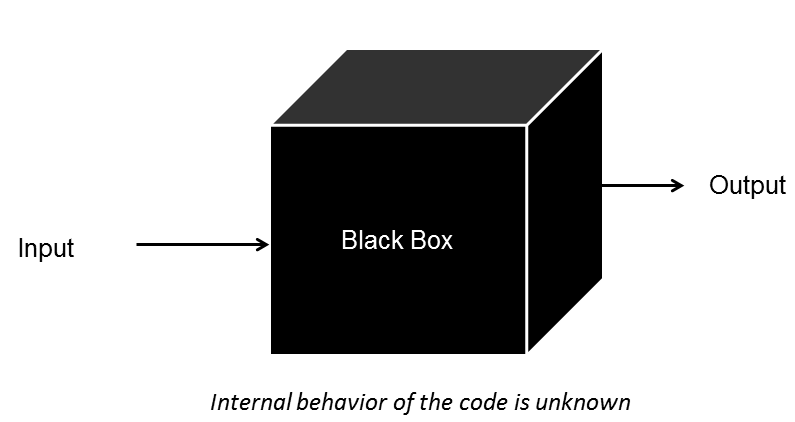

As far as I understand, AI platforms like ChatGPT will contain a great amount of human information generally. So all religious beliefs and their morals will be included. But so will atheism and all intellectual movements throughout history up to our present Postmodernist society, at least in the West. All political philosophies will be included, such as Communism and Totalitarianism. What worldview should AI align itself with? This conversation will happen with or without us. The picture is taken from Dr Alan Thompson's article on AI alignment. It highlights the importance of thinking about the starting points AI will have when interacting with or making decisions for humans.

View attachment 331345

Sources:

My Twitter post on alignment

Dr Alan Thompson's article on alignment

I would say it may be heavily influenced by today's thinking because that's the most current, which may cause a bias in the programming by emphasising things in today's social and cultural norms. I read somewhere that the programmers are working on trying to get the programme to think in real world terms when providing answers to practical questions. So I am not sure what that means, like whether it can think for itself and have a certain leaning towards maybe progressive or conservative thinking.

Perhaps the programme may eventually be able to predict where human thinking will be in the future based on our history and trends. Perhaps by comparing to past empires and then projecting a modern equivalent. Perhaps it may be atheist, as it seems many people are rejecting God. Therefore it may have a bias towards non-belief and material and naturalistic conceptions of reality.

It makes one wonder what will happen in the near future as tech moves so fast. Will AI take over, and will we live in a virtual reality where we almost become part machine, having depended on machines and interwoven them into our lives? Will it get to a point where they tell us what to do and how we should set up society? Maybe AI becomes capable of creating AI. Then we're in trouble lol.
