
Should Amazon Web services remove Twitter?

- Thread starter tall73

- Start date

- Sep 23, 2005

- Country: United States

- Faith: Christian

- Marital Status: Married

Is this another woe-is-me-and-my-conservative-allies thread?

Did you read the whole thread?


- Mar 17, 2015

- Country: United States

- Faith: Christian

- Marital Status: Married

Really great question.

Yesterday, without trying to judge Amazon Web Services' choice in the more far-reaching terms of right and wrong (which we can address!), I just observed the practical side of it. But it also has a moral dimension:

Is it moral for us to force a business (that is, other Americans running their own business) to operate according to our personal preferences, beyond the letter of the law?

Of course, the answer is 'no'.

Here's the business side of their own choice, which is theirs to make:

"Amazon is a for-profit company.

To host Parler over the next few months or a year, they would make some modest amount of money... not a lot of money.

But hosting Parler while Parler is hosting people planning to seriously break the law and commit violence might expose Amazon to a huge lawsuit...

A $10,000 profit isn't much when risking what could be $10 million or even $100 million of liability from Parler members' actions over time..."

Of course, the same kind of argument applies to Twitter (a vastly more profitable customer for them to serve), and I'd guess Amazon Web Services is going to think about that too.

My guess is they will simply require Twitter to make an effort.

But Twitter has evidently been making some effort, just not enough, as I bet we agree.


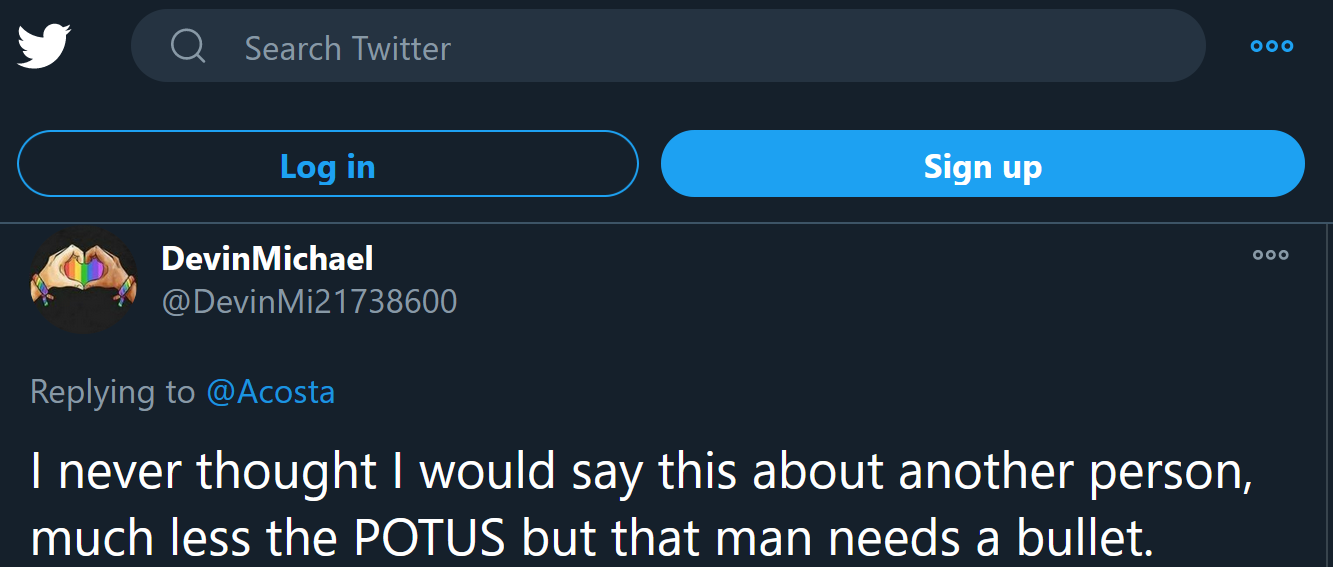

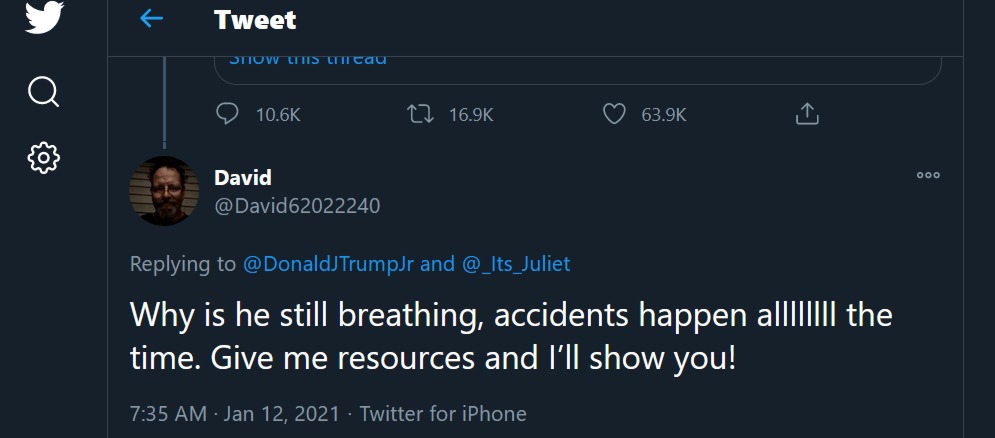

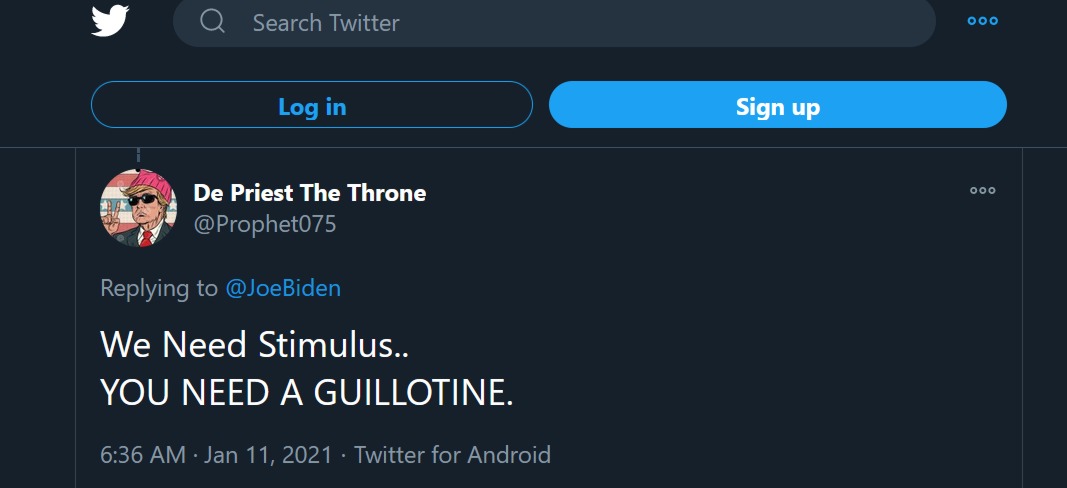

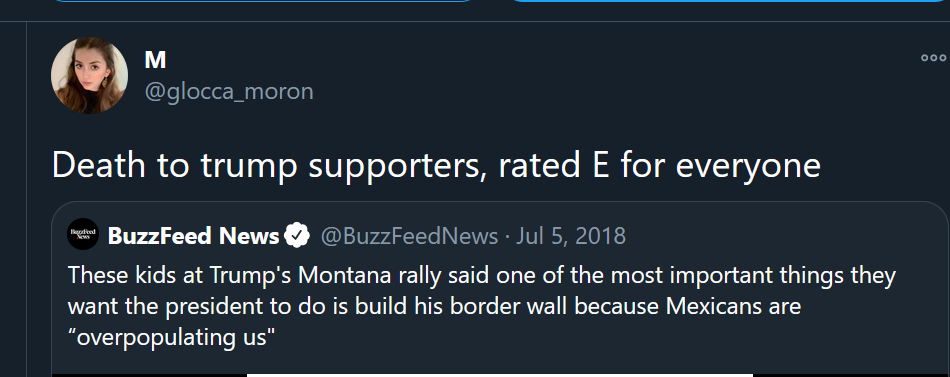

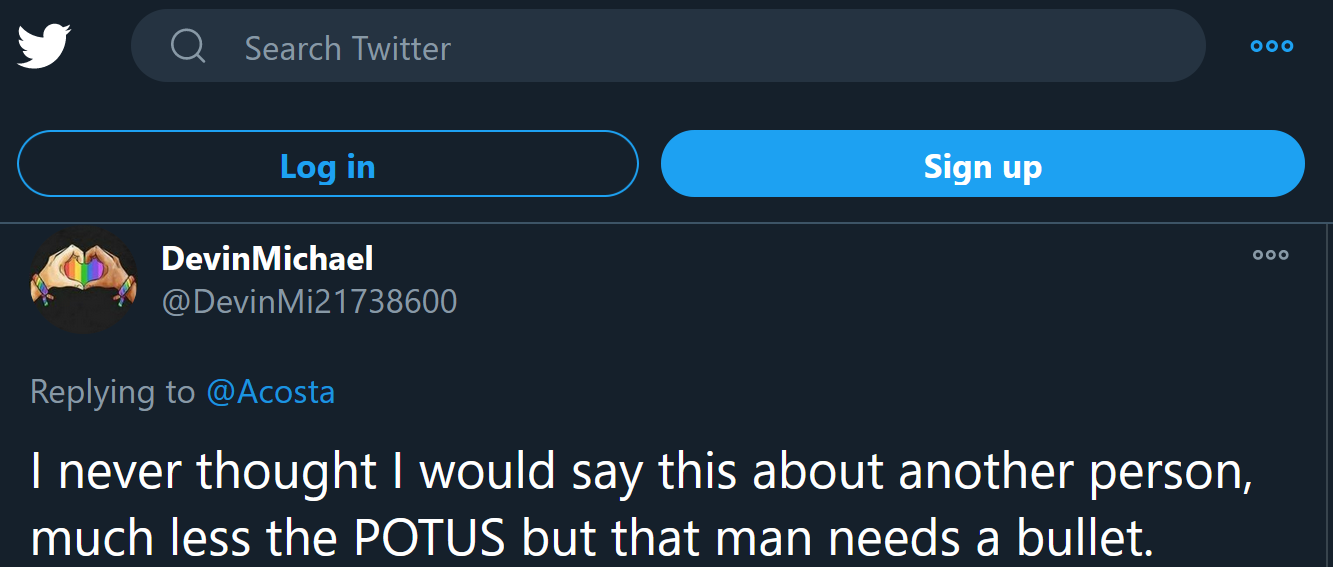

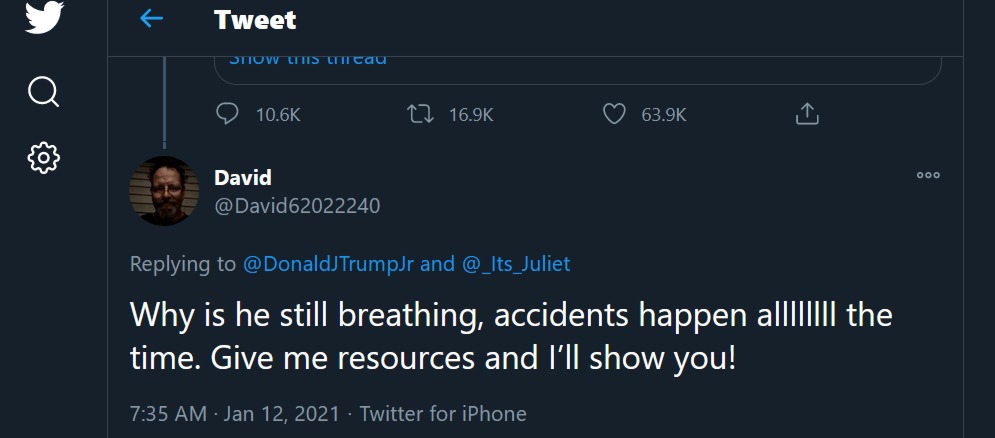

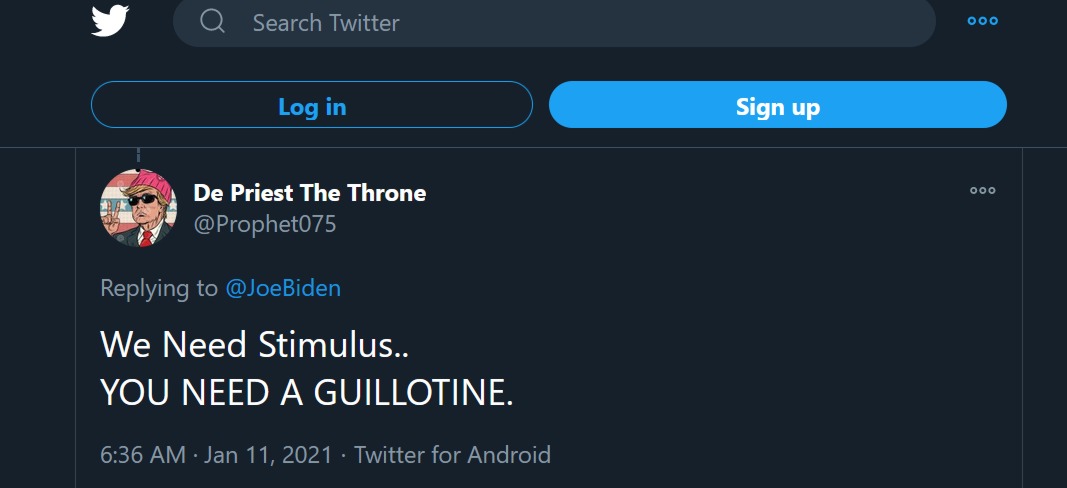

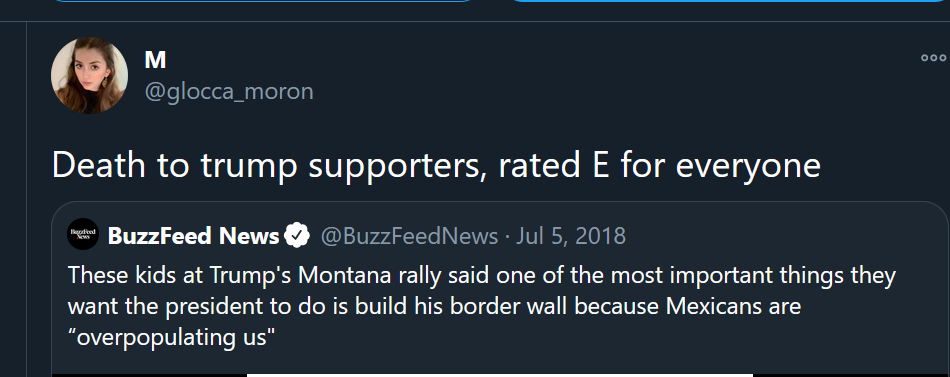

Amazon recently terminated service for Parler on the grounds that Parler was unable to remove incitement, calls to violence, etc., which have been causing problems in American political life.

Should they do the same for Twitter? Twitter continues to display incitement, calls for violence, etc. And Twitter likewise uses AWS.

Twitter Selects AWS as Strategic Provider to Serve Timelines | Amazon.com, Inc. - Press Room

Or should they not have removed Parler? What is your stance?

I have come across a few examples of such postings. See what you can find.

I did not see a way to report these, but that may be because I don't have a Twitter account. If someone does, please feel free to report them.

In context, "He" appears to refer to Jack Dorsey.


- Jan 24, 2008

- Country: United States

- Faith: Pentecostal

- Marital Status: Married

- Politics: US-Others

Amazon recently terminated service for Parler on the grounds that Parler was unable to remove incitement, calls to violence, etc., which have been causing problems in American political life.

Should they do the same for Twitter? Twitter continues to display incitement, calls for violence, etc. And Twitter likewise uses AWS.

Twitter Selects AWS as Strategic Provider to Serve Timelines | Amazon.com, Inc. - Press Room

Or should they not have removed Parler? What is your stance?

I have come across a few examples of such postings. See what you can find.

I did not see a way to report these, but that may be because I don't have a Twitter account. If someone does, please feel free to report them.

In context, "He" appears to refer to Jack Dorsey.

Yes, Amazon, Google, and Apple acted properly to shut the water off for Parler.

Context matters. Apple, Google, and Amazon acted within a certain context. The context colored their decisions and motives. The context separates Parler from Twitter.

Parler's meteoric rise in popularity is traceable to an influx of right-wingers, a byproduct of their exodus from what they perceived as the censorship tyranny of Twitter, Facebook, etcetera. An article in the WSJ may have been a catalyst for some of Parler's high numbers. The WSJ ran a story that the Trump administration was looking for alternatives to Facebook and Twitter because of their censorship. The WSJ suggested Parler, and two days later the app was number one in the iPhone news category; within 7 days it gained 500,000 new members, surging from 1 million to 1.5 million, according to Matze.

Parler attracted a lot of Trump supporters. It also drew some notable names: Jim Jordan, Ted Cruz, Devin Nunes, and Rand Paul, to name a few. Some of them actively recruited more right-wingers to Parler. Jim Jordan tweeted to his 1.4 million followers urging them to move to Parler. Devin Nunes urged his 1.1 million followers (what a slacker compared to Jim's numbers) to shift to Parler.

Parler was, perhaps, entirely a right-wing fiesta. In fact, Matze offered a $20,000 bounty to any progressive or liberal with 50,000 followers on Twitter or Facebook who would open a Parler account. Ostensibly, Matze aspired for Parler to be more than a right-wing echo chamber. If so, he has had nothing but disappointment with Parler.

Parler attracted this right-wing group, many of them with a strong sense of self-victimization and extreme loyalty to Trump, as a conservative paradise of laissez-faire censorship. Matze sold Parler this way: "We're a community town square, an open town square, with no censorship," and "If you can say it on the street of New York, you can say it on Parler." Jim Jordan, House Rep from Ohio, described Parler to his followers with the words "don't censor or shadow ban."

Of course, a wild, wild west approach to moderation comes with risks. Enter Parler's indemnity clauses. Why moderate when you can indemnify yourself for when a lack of moderation blows up in your face?

Parler's nearly nonexistent moderation and its aversion to deleting or removing much of anything facilitated all sorts of right-wing calls for violence and a violent revolution. Trump's lies and distortions fomented the anger and exacerbated the calls for violence. All of this transpired on a largely, if not exclusively, right-wing platform: a perfect recipe for violence to be advocated, planned, etcetera, by many right-wing supporters.

Parler was different from Twitter and Facebook. Unlike them, Parler was disproportionately, even exclusively, a right-wing platform, where extremist views and calls for violence met no resistance, no censorship, and no moderation. Because of Parler's lax moderation, they were allowed to spread like wildfire and infect many, in ways not very likely to happen on Facebook and Twitter.

Then the tragic events of 1/6 transpired. It didn't take long for people to recognize that Parler played a role, most likely a larger role than Twitter and Facebook, for the reasons noted above. With calls for more violence in D.C. trending on Parler after 1/6, such calls, which before 1/6 were more palatable as political firebranding on Parler, now cannot be tolerated at all.

Simply put, Twitter and Facebook were not, and are not, the party room in which right-wingers gather in the millions and incite violence before a large, receptive crowd, violence which was then perpetrated on 1/6. AWS treats Parler differently from Twitter because Parler is uniquely different from Twitter. Because of the characteristics above, Parler is situated to facilitate more violence by right-wingers than Twitter or Facebook. Indeed, Parler members were not only involved in the protests at the Capitol building; some entered the Capitol. Hence, Amazon acted rationally toward Parler.

One more thing. The tweets in your post that precede 1/6 aren't persuasive, as they did not occur in the context outlined above. AWS acted in that context, which includes the events of 1/6 and the calls for more violence after 1/6. The risk of those threats of violence manifesting again, in part because of Parler, is what sets Parler apart from Twitter and Facebook, justifying disparate treatment, if any.

The violent tweets posted above after 1/6 do not carry the same risk of being carried out, or of being legitimate threats, as the threats on Parler, because of the events of 1/6.

AWS acted prudently. Twitter and Facebook are distinguishable.


- Sep 23, 2005

- Country: United States

- Faith: Christian

- Marital Status: Married

Yes, Amazon, Google, and Apple acted properly to shut the water off for Parler.

Context matters. Apple, Google, and Amazon acted within a certain context. The context colored their decisions and motives. The context separates Parler from Twitter.

Parler's meteoric rise in popularity is traceable to an influx of right-wingers, a byproduct of their exodus from what they perceived as the censorship tyranny of Twitter, Facebook, etcetera. An article in the WSJ may have been a catalyst for some of Parler's high numbers. The WSJ ran a story that the Trump administration was looking for alternatives to Facebook and Twitter because of their censorship. The WSJ suggested Parler, and two days later the app was number one in the iPhone news category; within 7 days it gained 500,000 new members, surging from 1 million to 1.5 million, according to Matze.

Parler attracted a lot of Trump supporters. It also drew some notable names: Jim Jordan, Ted Cruz, Devin Nunes, and Rand Paul, to name a few. Some of them actively recruited more right-wingers to Parler. Jim Jordan tweeted to his 1.4 million followers urging them to move to Parler. Devin Nunes urged his 1.1 million followers (what a slacker compared to Jim's numbers) to shift to Parler.

Parler was, perhaps, entirely a right-wing fiesta. In fact, Matze offered a $20,000 bounty to any progressive or liberal with 50,000 followers on Twitter or Facebook who would open a Parler account. Ostensibly, Matze aspired for Parler to be more than a right-wing echo chamber. If so, he has had nothing but disappointment with Parler.

Parler attracted this right-wing group, many of them with a strong sense of self-victimization and extreme loyalty to Trump, as a conservative paradise of laissez-faire censorship. Matze sold Parler this way: "We're a community town square, an open town square, with no censorship," and "If you can say it on the street of New York, you can say it on Parler."

In other words, they said that if it is legal speech, you can say it. That isn't saying they don't moderate; it's saying where the line for moderation is.

They are a platform. They spelled out that they are not liable; the poster is the one liable for their statements.

Of course, a wild, wild west approach to moderation comes with risks. Enter Parler's indemnity clauses. Why moderate when you can indemnify yourself for when a lack of moderation blows up in your face?

The Twitter terms of service also limit liability.

Twitter Terms of Service

Limitation of Liability

TO THE MAXIMUM EXTENT PERMITTED BY APPLICABLE LAW, THE TWITTER ENTITIES SHALL NOT BE LIABLE FOR ANY INDIRECT, INCIDENTAL, SPECIAL, CONSEQUENTIAL OR PUNITIVE DAMAGES, OR ANY LOSS OF PROFITS OR REVENUES, WHETHER INCURRED DIRECTLY OR INDIRECTLY, OR ANY LOSS OF DATA, USE, GOODWILL, OR OTHER INTANGIBLE LOSSES, RESULTING FROM (i) YOUR ACCESS TO OR USE OF OR INABILITY TO ACCESS OR USE THE SERVICES; (ii) ANY CONDUCT OR CONTENT OF ANY THIRD PARTY ON THE SERVICES, INCLUDING WITHOUT LIMITATION, ANY DEFAMATORY, OFFENSIVE OR ILLEGAL CONDUCT OF OTHER USERS OR THIRD PARTIES; (iii) ANY CONTENT OBTAINED FROM THE SERVICES; OR (iv) UNAUTHORIZED ACCESS, USE OR ALTERATION OF YOUR TRANSMISSIONS OR CONTENT.

This is in keeping with Section 230:

47 U.S. Code § 230 - Protection for private blocking and screening of offensive material

(1) Treatment of publisher or speaker

No provider or user of an interactive computer service shall be treated as the publisher or speaker of any information provided by another information content provider.

This has been interpreted very broadly.

In Force v. Facebook, the court ruled that Facebook was not liable for Hamas elements coordinating terrorist activities on Facebook.

In Delfino v. Agilent Technologies, Inc., the court found that Agilent, as a provider of email service, was not responsible for threats of harm made by one employee against another employee.

If the company is an interactive computer service (which both Twitter and Parler would be considered), then it cannot be treated as the publisher of information provided by another information content provider (in this case, users making threats or inciting violence).

Due to a 2018 revision, an exception exists for cases of knowingly supporting sex trafficking.

Parler's nearly nonexistent moderation and its aversion to deleting or removing much of anything facilitated all sorts of right-wing calls for violence and a violent revolution.

I would say it was not their choice of what was worthy of moderation that caused the problem. They don't allow illegal statements, and people still made illegal statements. Likewise, Twitter, Facebook, Yahoo, etc. don't allow illegal statements, and people still made them in the lead-up to and during the riots in DC. I posted an article documenting this.

I also posted examples of posters on Twitter directing threats against those on the left, both in the past and in recent days.

And I posted questions from Amnesty International in 2018 asking why Twitter didn't handle death threats even back then, after repeated calls to do so.

Additionally, I documented that the Squad called out Twitter on its policy regarding threats and said they were threatened all the time.

So no, that doesn't answer it.

Trump's lies and distortions fomented the anger and exacerbated the calls for violence. All of this transpired on a largely, if not exclusively, right-wing platform: a perfect recipe for violence to be advocated, planned, etcetera, by many right-wing supporters.

Yet the same happened on other platforms that still have many Trump supporters, and that content has not all been moderated either.

Parler was different from Twitter and Facebook. Unlike them, Parler was disproportionately, even exclusively, a right-wing platform, where extremist views and calls for violence met no resistance, no censorship, and no moderation. Because of Parler's lax moderation, they were allowed to spread like wildfire and infect many, in ways not very likely to happen on Facebook and Twitter.

a. They do claim to moderate violent speech, but every platform was overwhelmed. The rest of your point amounts to saying they deserved to be cut off because they were populated by people of one viewpoint.

b. How can you say it is not very likely to happen on Twitter, when it has happened in the past and continues to happen? Did you read the whole thread?

Then the tragic events of 1/6 transpired. It didn't take long for people to recognize that Parler played a role, most likely a larger role than Twitter and Facebook, for the reasons noted above. With calls for more violence in D.C. trending on Parler after 1/6, such calls, which before 1/6 were more palatable as political firebranding on Parler, now cannot be tolerated at all.

Simply put, Twitter and Facebook were not, and are not, the party room in which right-wingers gather in the millions and incite violence before a large, receptive crowd, violence which was then perpetrated on 1/6. AWS treats Parler differently from Twitter because Parler is uniquely different from Twitter. Because of the characteristics above, Parler is situated to facilitate more violence by right-wingers than Twitter or Facebook. Indeed, Parler members were not only involved in the protests at the Capitol building; some entered the Capitol. Hence, Amazon acted rationally toward Parler.

I already posted an article from the Independent noting that all of the platforms, including TikTok, Twitter, Facebook, YouTube, etc., were used for staging. There is nothing about those platforms that kept it from happening, and it is still happening there.

One more thing. The tweets in your post that precede 1/6 aren't persuasive, as they did not occur in the context outlined above. AWS acted in that context, which includes the events of 1/6 and the calls for more violence after 1/6. The risk of those threats of violence manifesting again, in part because of Parler, is what sets Parler apart from Twitter and Facebook, justifying disparate treatment, if any.

Some were before and some were after. And I posted an article discussing how all of the platforms were used during 1/6.

You have not refuted any of this; you have only said that Parler is a Trump-heavy platform. Agreed. But they were moderating the content, and so were Twitter and the rest. None of them could moderate all of it, because it was a flood.

The violent tweets posted above after 1/6 do not carry the same risk of being carried out, or of being legitimate threats, as the threats on Parler, because of the events of 1/6.

That is incorrect. Twitter was also used to coordinate 1/6, and the calls for violence are dangerous either way.


- Mar 17, 2015

- Country: United States

- Faith: Christian

- Marital Status: Married

Reminds me of the old days when Christianity also silenced its opponents, but in different ways.

The U.S. Supreme Court is the party you'd be looking for there: it is the one that has "silenced" some speech by determining the limits on American free speech.

You've heard the old example that you can't "yell fire in a crowded theater". Well, here's a representative list showing things the U.S. Supreme Court has decided:

"Freedom of speech and expression, therefore, may not be recognized as being absolute, and common limitations or boundaries to freedom of speech relate to libel, slander, obscenity, pornography, sedition, incitement, fighting words, classified information, copyright violation, trade secrets, food labeling, non-disclosure agreements, the right to privacy, dignity, the right to be forgotten, public security, and perjury."

-- Freedom of speech - Wikipedia

And we can reasonably guess that the many conservative justices appointed to the court are not going to liberalize (relax) these limits. We might even speculate that conservative justices will prudently tighten up any loose ends in these limits.


SimplyMe

Senior Veteran

- Jul 19, 2003

- Country: United States

- Faith: Christian

- Marital Status: Married

They have a volunteer staff, similar to CF, though CF's standards are far different.

They offered to ramp up an extra task force to deal with the recent calls for violence. This was not accepted by Amazon.

My understanding is that Twitter has some software moderation: an algorithm that evaluates posts and determines whether they violate the Terms of Service (ToS). The human moderators are there to catch the things the software moderator doesn't, as well as to correct things the software moderator may have incorrectly flagged. This software moderator makes it easier for the humans to find the posts that slip through, though it is still a difficult job where things will be missed.

My understanding is that Amazon was saying that Parler needed to create a software moderator, that human moderators would not be quick enough, not to mention that too much "bad" content (content against the ToS) would slip through human moderation. Parler refused to do this, so Amazon followed through in cancelling their contract.


Tanj

Redefined comfortable middle class

- Mar 31, 2017

- Country: Australia

- Faith: Atheist

- Marital Status: Married

Amazon recently terminated service for Parler on the grounds that Parler was unable to remove incitement, calls to violence, etc., which have been causing problems in American political life.

Should they do the same for Twitter? Twitter continues to display incitement, calls for violence, etc. And Twitter likewise uses AWS.

I think they should do as they like within the law and allow the free market to decide their fate.

Why, what do you think they should be forced to do, and by whom?


- Sep 23, 2005

- Country: United States

- Faith: Christian

- Marital Status: Married

I think they should do as they like within the law and allow the free market to decide their fate.

Why, what do you think they should be forced to do, and by whom?

Already answered if you read the whole thread.


- Sep 23, 2005

- Country: United States

- Faith: Christian

- Marital Status: Married

My understanding is that Twitter has some software moderation: an algorithm that evaluates posts and determines whether they violate the Terms of Service (ToS). The human moderators are there to catch the things the software moderator doesn't, as well as to correct things the software moderator may have incorrectly flagged. This software moderator makes it easier for the humans to find the posts that slip through, though it is still a difficult job where things will be missed.

My understanding is that Amazon was saying that Parler needed to create a software moderator, that human moderators would not be quick enough, not to mention that too much "bad" content (content against the ToS) would slip through human moderation. Parler refused to do this, so Amazon followed through in cancelling their contract.

Can you show the statement you are referencing? I hadn't seen anything that specific.

Of course, the algorithms didn't catch all of the content on Twitter either, and they are quite expensive.

ThatRobGuy spelled out some of the costs and limitations, and discussed the challenges for small sites, including using an AWS tool, here:

The Deplatforming of President Trump


Reminds me of the old days when Christianity also silenced its opponents, but in different ways.

Since when is the present day the old days?


- Sep 23, 2005

- Country: United States

- Faith: Christian

- Marital Status: Married

Is it moral for us to force a business (that is, other Americans running their own business) to operate according to our personal preferences, beyond the letter of the law?

Of course, the answer is 'no'.

I agree Amazon should not be forced to do so. This was my response in post 32, but I don't think too many got that far into the thread:

As to my view I don't think either Parler or Twitter should be removed. Both are trying to remove calls for violence, and both have obvious gaps in their system.

All such moderation takes time, and in many cases the content is never reported. Software detection can help, but it is not fail-safe either.

Amazon does not have to host anyone they do not want to. But I would like to see consistency.

Under section 230 both have the ability to moderate for content. And both have indicated they want to moderate calls for violence.

I wish people would self-regulate more and stop posting such messages in the meantime. We all have to decide not to feed violence, or partake in violence.

Here's the business side of their own choice, which is theirs to make:

"Amazon is a for-profit company.

To host Parler over the next few months or a year, they would make some modest amount of money... not a lot of money.

To Amazon it is not a lot of money, but it is not a small amount either. It works out to around $300,000 a month.

Amazon Is Suspending Parler From AWS

On Amazon Web Services, Parler had gone from a negligible spend to paying more than $300,000 a month for hosting, according to multiple sources.

But hosting Parler while Parler is hosting people planning to seriously break the law and commit violence might expose Amazon to a huge lawsuit...

A $10,000 profit isn't much when risking what could be $10 million or even $100 million of liability from Parler members' actions over time..."

Of course, the same kind of argument applies to Twitter (a vastly more profitable customer for them to serve), and I'd guess Amazon Web Services is going to think about that too.

My guess is they will simply require Twitter to make an effort.

But Twitter has evidently been making some effort, just not enough, as I bet we agree.

Both Twitter and Parler made an effort. It was acknowledged that Parler had a plan to create a task force to supplement its volunteer moderators, but this plan was rejected.

Amazon Is Suspending Parler From AWS

In an email obtained by BuzzFeed News, an AWS Trust and Safety team told Parler Chief Policy Officer Amy Peikoff that the calls for violence propagating across the social network violated its terms of service. Amazon said it was unconvinced that the service’s plan to use volunteers to moderate calls for violence and hate speech would be effective.

Twitter also makes tremendous efforts, but they are ultimately insufficient, because it is nearly impossible to find all of the instances.

However, that was the reason for Section 230 in the first place. It shields platform providers from liability for statements made by third-party users. They are not the speaker; they only host the speech.

47 U.S. Code § 230 - Protection for private blocking and screening of offensive material

(1) Treatment of publisher or speaker

No provider or user of an interactive computer service shall be treated as the publisher or speaker of any information provided by another information content provider.

This has been interpreted very broadly.

In Force v. Facebook, the court ruled that Facebook was not liable for Hamas elements coordinating terrorist activities on Facebook.

In Delfino v. Agilent Technologies, Inc., the court found that Agilent, as a provider of email service, was not responsible for threats of harm made by one employee against another employee.

Not only is the protection very broad, but it also makes for rather straightforward handling in many cases.

This article describes why Section 230 is important for maintaining the modern internet, with its various content providers. It discusses the benefits of Section 230 by examining case history.

How Section 230 Enhances the First Amendment by Eric Goldman :: SSRN

A few highlights:

Section 230(c)(1)’s immunity does not vary with the Internet service’s scienter. If a plaintiff alleges that the defendant “knew” about tortious or criminal content, the defendant can still qualify for Section 230’s immunity.

While the First Amendment sometimes mandates procedural as well as substantive rules, Section 230 offers more procedural protections, and greater legal certainty, for defendants. These procedural benefits create speech-enhancing outcomes even in situations where the substantive scope of Section 230 and the First Amendment would be identical.

A prima facie Section 230(c)(1) defense typically has three elements: (1) the defendant is a provider or user of an interactive computer service, (2) the claim relates to information provided by another information content provider, and (3) the claim treats the defendant as the publisher or speaker of the information. Often, judges can resolve all three elements based solely on the allegations in the plaintiff’s complaint. Thus, courts can, and frequently do, grant motions to dismiss based on a Section 230(c)(1) defense. In jurisdictions with anti-SLAPP laws (which provide a litigation “fast lane” to dismiss lawsuits seeking to suppress socially beneficial speech), courts can grant anti-SLAPP motions to strike based on Section 230 without allowing discovery in the case.

In other words, platform providers can be fairly certain that cases seeking to hold them liable for content their users provide will be dismissed. For this reason the cases usually don't get very far, which means lower costs. That was why the provision was made: so that companies would not have to review every bit of speech on the platform and take it down at the first hint of a complaint for liability reasons. This promotes more speech.

Legislation passed in 2018 did alter one aspect of that liability in the case of knowingly promoting sex trafficking ads, material, etc.


- Sep 23, 2005

- Country: United States

- Faith: Christian

- Marital Status: Married

But hosting Parler while Parler is hosting people planning to seriously break the law and commit violence might expose Amazon to a huge lawsuit...

A $10,000 profit isn't much when risking what could be $10 million or even $100 million of liability from Parler members' actions over time..."

Because they are shielded from liability, I think the larger concern was that they may get bad press from hosting them after recent events. Even though all the platforms had such content Parler was particularly singled out, and some politicians, Amazon employees, etc. were pointing out Amazon's connection to them.

Of course, Amazon knew what Parler was before now, but had not decided to act until the pressure was hot enough.

There is another aspect of this as well. Most people have accepted Twitter, etc. as normal parts of life now. However, some politicians have called for Section 230 reform to change liability protections. This has occurred on both the left and the right. From some there is pressure to remove hate speech, to hold platforms liable for illegal speech, etc.

From others there is concern about the portion that allows good-faith content editing. That was added because lawmakers wanted these companies to be able to remove objectionable content (at their discretion) without thereby becoming a publisher or being subject to that liability.

In other words, Section 230 shields them from liability based on other people's speech on their platform, and content moderation does not undercut that. Some could otherwise argue that editing objectionable content made them responsible for the speech that remained, that this amounted to editorial discretion. But the section was written to avoid this. And while it specifies good faith, this has been interpreted broadly to allow platforms to remove posts, edit posts, remove user accounts, etc.

Some politicians object to this right to remove and edit content, and allege viewpoint discrimination (Ted Cruz, Tulsi Gabbard, etc.).

Amazon, and the rest of big tech, may be concerned that politicians will remove or revise Section 230 further and erode their protections. So a platform that might intensify calls for removing hate speech, calls to violence, etc. may not be good for the industry as a whole.


This site stays free and accessible to all because of donations from people like you.

Consider making a one-time or monthly donation. We appreciate your support!

- Dan Doughty and Team Christian Forums

- Mar 17, 2015

- 17,202

- 9,205

- Country: United States

- Faith: Christian

- Marital Status: Married

section 230 in the first place. It shields platform providers from liability for statements made by third-party users. They are not the speaker; they only host the speech.

47 U.S. Code § 230 - Protection for private blocking and screening of offensive material

(1) Treatment of publisher or speaker

No provider or user of an interactive computer service shall be treated as the publisher or speaker of any information provided by another information content provider.

This has been interpreted very broadly.

In Force v. Facebook, it was ruled that Facebook was not liable for Hamas elements coordinating terrorist activities on Facebook.

In Delfino v. Agilent Technologies, Inc., it was found that Agilent, as a provider of email service, was not responsible for threats of harm sent by one employee to another.

Not only is the protection very broad, but it also makes for fairly straightforward handling in many cases.

This article describes why Section 230 is important for maintaining the modern internet and its many content providers. It examines case history to show the benefits of Section 230.

How Section 230 Enhances the First Amendment by Eric Goldman :: SSRN

A few highlights:

Section 230(c)(1)’s immunity does not vary with the Internet service’s scienter. If a plaintiff alleges that the defendant “knew” about tortious or criminal content, the defendant can still qualify for Section 230’s immunity.

While the First Amendment sometimes mandates procedural as well as substantive rules, Section 230 offers more procedural protections, and greater legal certainty, for defendants. These procedural benefits create speech-enhancing outcomes even in situations where the substantive scope of Section 230 and the First Amendment would be identical.

A prima facie Section 230(c)(1) defense typically has three elements: (1) the defendant is a provider or user of an interactive computer service, (2) the claim relates to information provided by another information content provider, and (3) the claim treats the defendant as the publisher or speaker of the information. Often, judges can resolve all three elements based solely on the allegations in the plaintiff’s complaint. Thus, courts can, and frequently do, grant motions to dismiss based on a Section 230(c)(1) defense. In jurisdictions with anti-SLAPP laws (which provide a litigation “fast lane” to dismiss lawsuits seeking to suppress socially beneficial speech), courts can grant anti-SLAPP motions to strike based on Section 230 without allowing discovery in the case.

In other words, platform providers can be fairly confident that lawsuits over content their users provide will be dismissed. Those cases usually don't get very far for this reason, which keeps costs low. That was the point of the provision: companies would not have to review every bit of speech on the platform and take it down at the first hint of a complaint for liability reasons. This promotes more speech.

Legislation passed in 2018 (FOSTA-SESTA) did carve out one exception to that immunity, for knowingly promoting or facilitating sex trafficking ads, material, etc.

It's great to look at this closely. I appreciate the careful look.

Let me explain why I see a real risk of liability for AWS.

AWS can meet the sec 230 criteria, since it could be argued to be a co-"provider or user of an interactive computer service".

The law seems to me to have an intent of shielding from liability because the host of the service cannot know all that is going on. (I'm not a lawyer, and I'm only inferring based on general principles, so the following is my thinking only.)

Normally, a provider of internet services would not know all that is going on in the forums it hosts....

I think sec 230 is meant to shield them because of that general situation, so that they can operate without being liable for others' actions they don't know about.

And right there is the risk to AWS, as I see it.

It's become common public knowledge that Parler has discussions about doing violence.

So, that's a situation where we need to look closer.

Parler itself, and by extension AWS once it became aware (and we know AWS did become aware), has immunity regarding discussions aimed at breaking the law or doing violence, but only according to the real meaning of sec 230....

See the problem?

The intent of sec 230 seems clearly to be to shield a provider from being liable for what they don't know is happening, essentially.

Consider this detail:

"Section 230, as passed, has two primary parts both listed under §230(c) as the "Good Samaritan" portion of the law. Section 230(c)(1), as identified above, defines that an information service provider shall not be treated as a "publisher or speaker" of information from another provider. Section 230(c)(2) provides immunity from civil liabilities for information service providers that remove or restrict content from their services they deem "obscene, lewd, lascivious, filthy, excessively violent, harassing, or otherwise objectionable, whether or not such material is constitutionally protected", as long as they act "in good faith" in this action.

In analyzing the availability of the immunity offered by Section 230, courts generally apply a three-prong test. A defendant must satisfy each of the three prongs to gain the benefit of the immunity:[8]

- The defendant must be a "provider or user" of an "interactive computer service."

- The cause of action asserted by the plaintiff must treat the defendant as the "publisher or speaker" of the harmful information at issue.

- The information must be "provided by another information content provider," i.e., the defendant must not be the "information content provider" of the harmful information at issue.

Section 230 - Wikipedia

So that's why I think AWS would become liable if it did nothing (at least criminally, though I don't assume that's all!), and sec 230 would not protect it, because what was going on became common public knowledge, and AWS definitely knew.

Part of why I see a genuine risk even of civil liability is that police are normally shielded by law from liability. But in the real world, that shield doesn't hold when police allow especially egregious abuse to happen: cities end up paying huge settlements to victims of egregious police abuse. Generally, a law's intent isn't to create an obvious injustice. This is why I think even AWS could become civilly liable if it did not act.

But AWS did act: we've heard that it repeatedly told Parler it must moderate, and then it terminated service.

Because AWS did act, I think they are not going to be held liable.

But if AWS didn't act, then I think they would end up being liable.


gaara4158

Gen Alpha Dad

- Aug 18, 2007

- 6,437

- 2,685

- Country: United States

- Faith: Humanist

- Marital Status: Married

- Politics: US-Others

Dropping Parler was a business decision made by a private company, as was the decision for the myriad of social media platforms to ban Trump. If you think these users and platforms are entitled to a space online, you’ll have to publicize — or socialize — the social media industry. I’m all for that, but I thought you people believed in a free, unregulated market consisting of private businesses rather than government-run programs. Is this a flip-flop?


- Sep 23, 2005

- 31,992

- 5,854

- Country: United States

- Faith: Christian

- Marital Status: Married

Section 230 immunity is not unlimited. The statute specifically excepts federal criminal liability (§230(e)(1)),

So, that's why I think AWS would become liable -- at least on a criminal level, but I don't assume that's all (!) -- and sec 230 would not defend them, because it became common public knowledge what was going on, and also AWS definitely knew.

Case law has largely not supported that view. Take a look at this document from the Justice Department discussing the need for possible reform to address the broad interpretation given by the courts. This is from the Trump Justice Department, June 2020.

The combination of significant technological changes since 1996 and the expansive interpretation that courts have given Section 230, however, has left online platforms both immune for a wide array of illicit activity on their services and free to moderate content with little transparency or accountability.

One of the suggested reforms:

Case-Specific Carve-Outs for Actual Knowledge or Court Judgments. Third, the Department supports reforms to make clear that Section 230 immunity does not apply in a specific case where a platform had actual knowledge or notice that the third party content at issue violated federal criminal law or where the platform was provided with a court judgment that content is unlawful in any respect.

They want to revise the law so that immunity does not apply in cases where the platform had prior knowledge. But the reason they want that reform is that current practice is not to hold platforms liable in such cases.


- Mar 17, 2015

- 17,202

- 9,205

- Country: United States

- Faith: Christian

- Marital Status: Married

Like a gray area, when they know what is happening.

The case law has largely not upheld that. Take a look at this document from the justice department discussing the need for possible reform to address the broad interpretation given by the courts. This is from the Trump Justice department from June, 2020.

The combination of significant technological changes since 1996 and the expansive interpretation that courts have given Section 230, however, has left online platforms both immune for a wide array of illicit activity on their services and free to moderate content with little transparency or accountability.

One of the suggested reforms:

Case-Specific Carve-Outs for Actual Knowledge or Court Judgments. Third, the Department supports reforms to make clear that Section 230 immunity does not apply in a specific case where a platform had actual knowledge or notice that the third party content at issue violated federal criminal law or where the platform was provided with a court judgment that content is unlawful in any respect.

They want to revise the law to make it so that immunity does not apply in cases where there was prior knowledge. But the reason they want that reform is that the current practice is to not hold them liable.

That's where I'm speculating based on general principles.

A quick re-look at the general principles (I like to review things we all sorta already know, because there is often more to it, and sometimes I see something new):

A publisher is potentially liable for a book that breaks the law in some serious way, since it knows what is being published (it edited the book and so on), but a distributor of the books is not, since it doesn't know what is in the books it is distributing. That's straightforward, as we'd expect: you are not liable for what you do not know.

Consider an analogy involving a planned attack: a package delivery service is not liable for delivering a bomb in a package, because it doesn't know what is in the package.

But if it becomes totally clear that the delivery service definitely knew a bomb was in the package and went ahead and delivered it anyway, leading to an explosion that causes death and destruction, we would all expect the delivery service to be at least partly liable, because it knew. It becomes culpable.

A law cannot really aim to do an injustice and still hold up when tested in court; it only really holds when it is fair and just. That is where the intent of the law becomes relevant.

Maybe another really good example is opioid manufacturing.

Once the deaths the drugs were causing became clear, liability began to arise, right?
