We humans created AI, and its definition as described by computer science defines the objective for AI. I guess that's technically correct, but there must be hundreds, maybe thousands(?), of person-years of human research (trial-and-error statistics, game theory, search-algorithm experience, etc.) that have been poured into distilling the present 'winning formula' of algorithm choices?

Exactly how all of that manifests itself in the final product has sort of been lost in all the number crunching, I think(?)

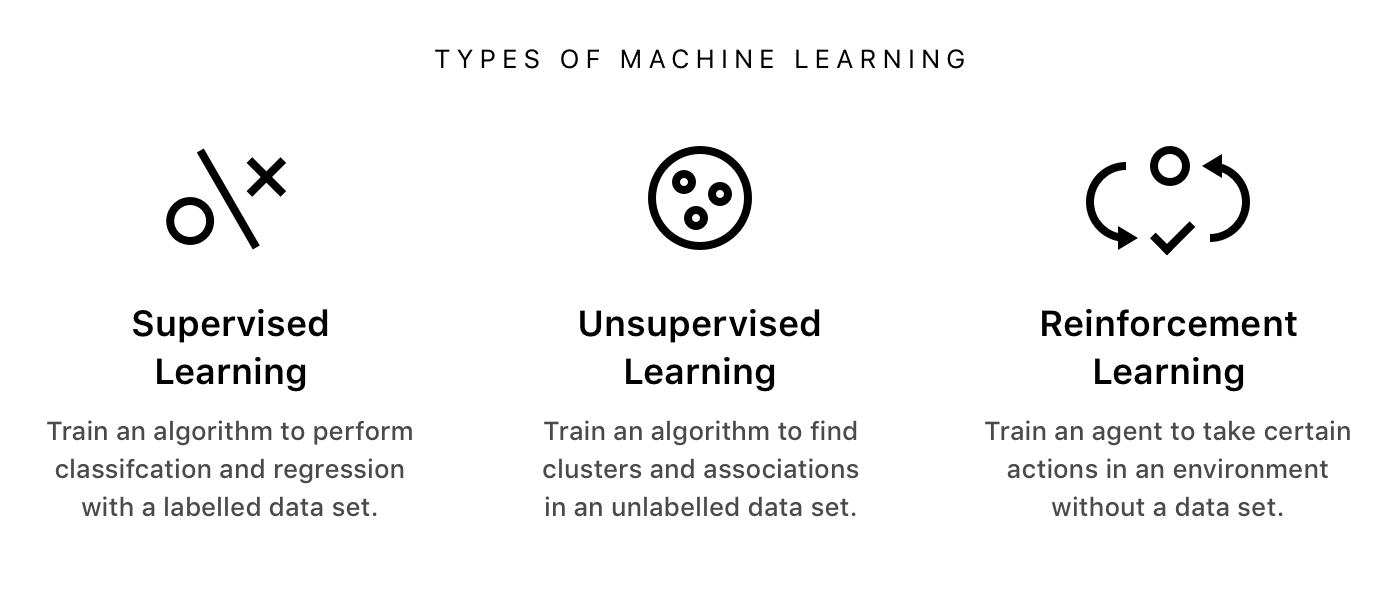

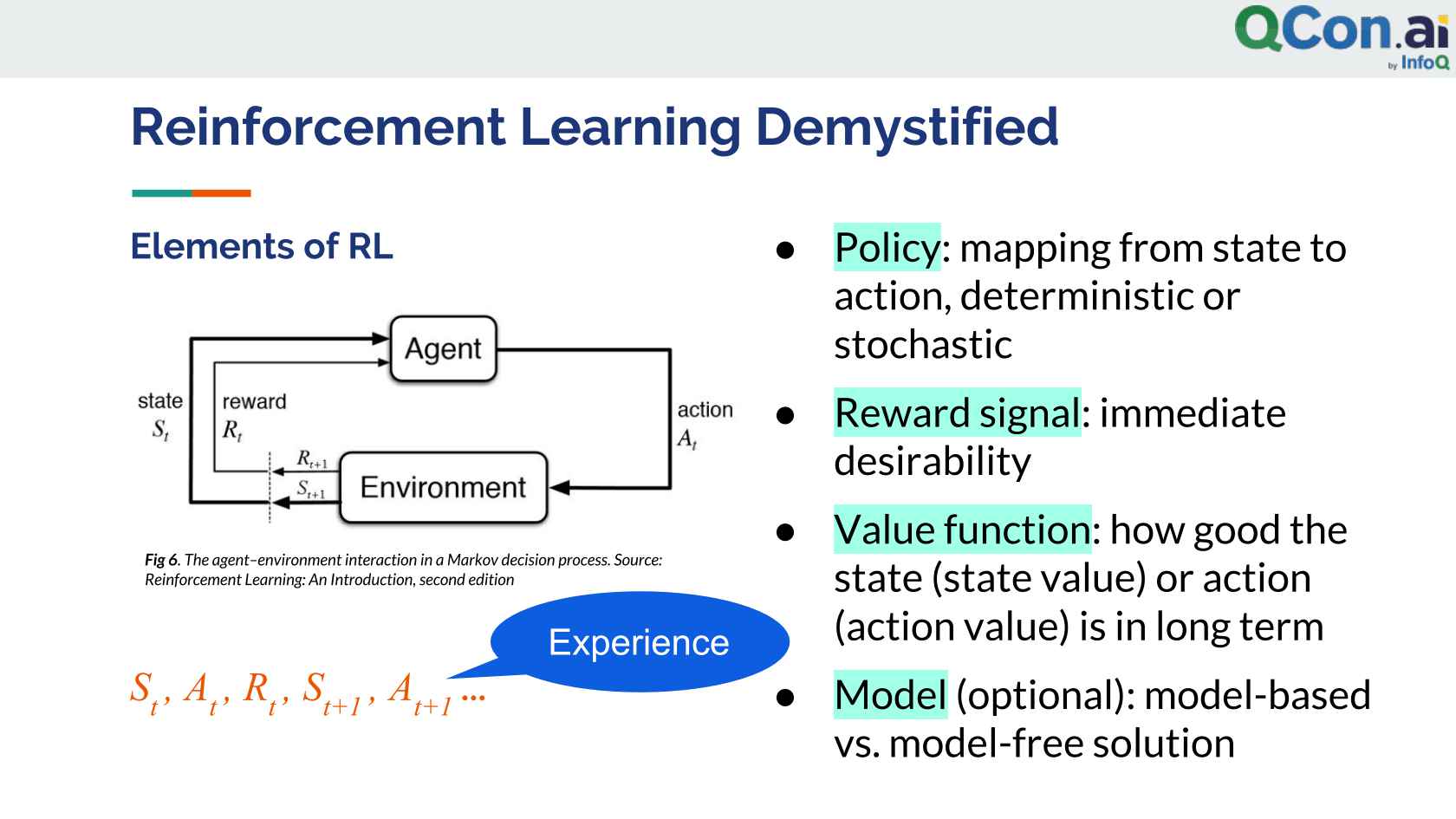

Computer science defines AI research as the study of "intelligent agents": any device that perceives its environment and takes actions that maximize its chance of successfully achieving its goals. A more elaborate definition characterizes AI as "a system's ability to correctly interpret external data, to learn from such data, and to use those learnings to achieve specific goals and tasks through flexible adaptation".
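
To make the "intelligent agent" definition a bit more concrete, here is a minimal sketch of a perceive-then-act loop. Everything in it is an assumption for illustration: the number-line world, the names `choose_action` and `run_agent`, and the distance-based score, which stands in for "chance of achieving its goals". It is a toy, not how any real system is built.

```python
from typing import Callable, List, Sequence


def choose_action(percept: int, actions: Sequence[int],
                  score: Callable[[int, int], float]) -> int:
    """Pick the action whose predicted outcome scores highest for this percept."""
    return max(actions, key=lambda a: score(percept, a))


def run_agent(start: int, goal: int, max_steps: int = 20) -> List[int]:
    """Perceive-act loop on a number line; returns the positions visited."""
    actions = (-1, 0, 1)  # step left, stay put, step right

    # Hypothetical scoring rule: being closer to the goal is better.
    def score(pos: int, action: int) -> float:
        return -abs(goal - (pos + action))

    position = start
    path = [position]
    for _ in range(max_steps):
        if position == goal:  # goal achieved, stop acting
            break
        # "Perceive" the current position, then act on the best-scoring option.
        position += choose_action(position, actions, score)
        path.append(position)
    return path


if __name__ == "__main__":
    print(run_agent(0, 3))
```

The hand-written `score` function is exactly where the human research mentioned above would come in: someone has to decide what "closer to the goal" means before the agent can maximize anything.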
